Traveling Waves Encode the Recent Past and Enhance Sequence Learning

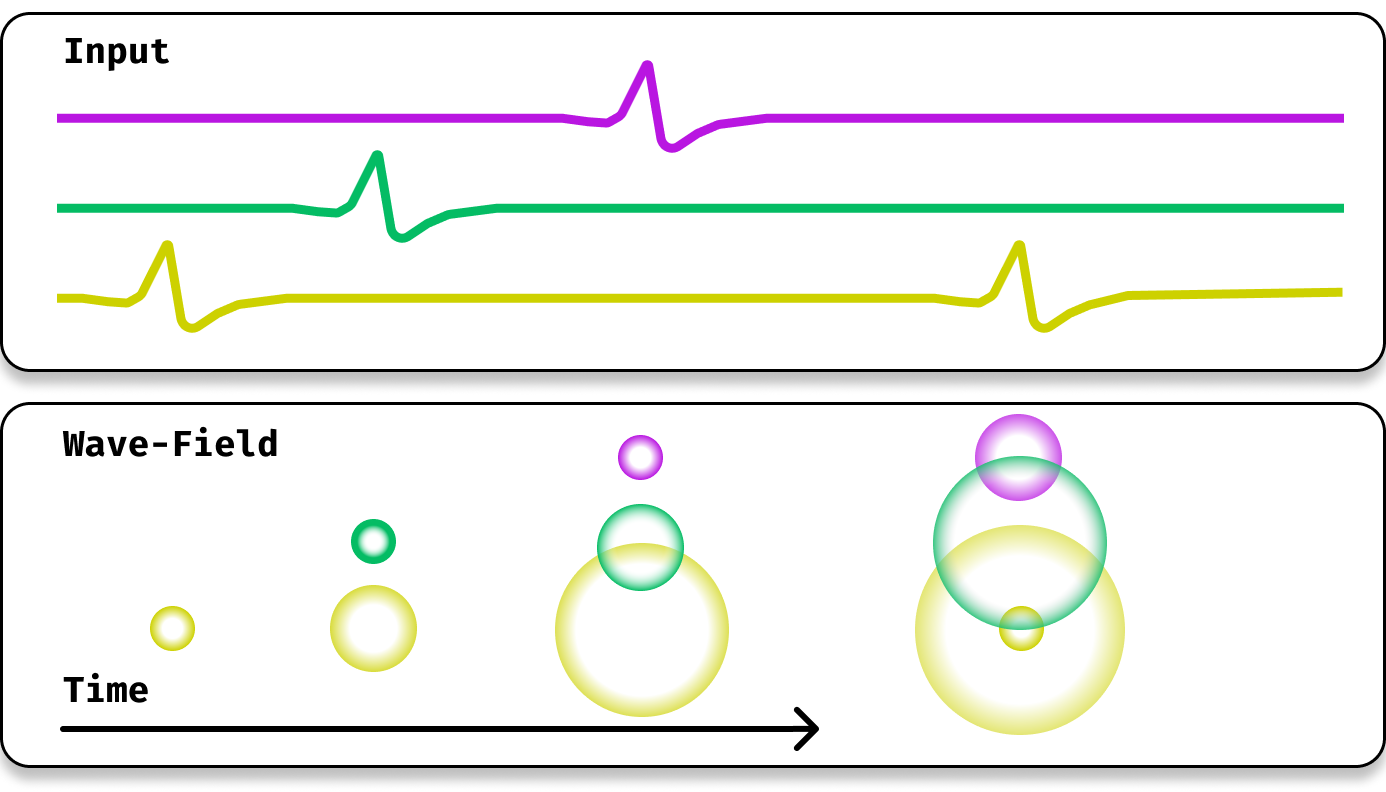

Illustration of three input signals (top) and a corresponding wave-field with induced traveling waves (bottom). From an instantaneous snapshot of the wave-field at each timestep we are able to decode both the time of onset and the input channel of each input spike. Furthermore, subsequent spikes in the same channel do not overwrite one another.
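
The following toy, noise-free sketch makes the caption's decoding claim concrete. It assumes that each input channel drives its own ring of `n_cells` cells, that a wave advances exactly one cell per timestep, and that no wave has traveled far enough to wrap around its ring. Under those assumptions a spike's distance from its source cell equals its age, so a single snapshot recovers every (onset time, channel) pair. The function names are hypothetical. This is not the model or decoder used in the paper.

```python
from typing import Dict, List, Tuple


def simulate_wave_field(
    spikes: Dict[int, List[int]], n_channels: int, n_cells: int, n_steps: int
) -> List[List[float]]:
    """Toy wave-field with one ring of cells per input channel (assumption).

    `spikes` maps a timestep to the list of channels that spike at that step.
    """
    field = [[0.0] * n_cells for _ in range(n_channels)]
    for t in range(n_steps):
        # Every existing wave moves one cell forward (circular shift by one).
        field = [[row[-1]] + row[:-1] for row in field]
        # New spikes enter at the source cell (index 0) of their channel.
        for c in spikes.get(t, []):
            field[c][0] += 1.0
    return field


def decode_snapshot(field: List[List[float]], n_steps: int) -> List[Tuple[int, int]]:
    """Recover (onset_time, channel) pairs from the final snapshot alone.

    A spike injected at step t0 has moved (n_steps - 1 - t0) cells, so its
    position encodes its age. Valid only while no wave has wrapped around.
    """
    events = []
    for channel, row in enumerate(field):
        for pos, value in enumerate(row):
            if value != 0.0:
                events.append((n_steps - 1 - pos, channel))
    return sorted(events)


field = simulate_wave_field({2: [0], 5: [1], 7: [0]}, n_channels=3, n_cells=16, n_steps=10)
print(decode_snapshot(field, n_steps=10))  # [(2, 0), (5, 1), (7, 0)]
```

The two channel-0 spikes end up at different cells of the same ring (cells 7 and 2), which corresponds to the "no overwriting" property described in the caption.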

Traveling waves of neural activity have been observed throughout the brain at a diversity of regions and scales; however, their precise computational role is still debated. One physically grounded hypothesis suggests that the cortical sheet may act like a wave-field capable of storing a short-term memory of sequential stimuli through induced waves traveling across the cortical surface. To date, however, the computational implications of this idea have remained hypothetical due to the lack of a simple recurrent neural network architecture capable of exhibiting such waves. In this work, we introduce a model to fill this gap, which we denote the Wave-RNN (wRNN), and demonstrate how both connectivity constraints and initialization play a crucial role in the emergence of wave-like dynamics. We then empirically show how such an architecture indeed efficiently encodes the recent past through a suite of synthetic memory tasks where wRNNs learn faster and perform significantly better than wave-free counterparts. Finally, we explore the implications of this memory storage system on more complex sequence modeling tasks such as sequential image classification and find that wave-based models not only again outperform comparable wave-free RNNs while using significantly fewer parameters, but additionally perform comparably to more complex gated architectures such as LSTMs and GRUs. We conclude with a discussion of the implications of these results for both neuroscience and machine learning.
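The abstract attributes the emergence of waves to connectivity constraints and initialization. Below is a minimal sketch of that idea under our own assumptions. The hidden state is a single ring of units. The recurrent weights are a short circular 1-D convolution kernel shared across positions, which serves as the connectivity constraint. The kernel is initialized to a one-unit shift, which serves as the initialization. Input enters only at unit 0, and the nonlinearity is ReLU. All names and values are hypothetical and illustrative. The authors' actual implementation is in the linked repository.

```python
from typing import List


def relu(v: float) -> float:
    return v if v > 0.0 else 0.0


def circular_conv1d(h: List[float], kernel: List[float]) -> List[float]:
    """Cross-correlate h with an odd-length kernel using circular (ring) padding."""
    n, r = len(h), len(kernel) // 2
    return [
        sum(kernel[k] * h[(i + k - r) % n] for k in range(len(kernel)))
        for i in range(n)
    ]


def wave_rnn_step(
    h: List[float], x: List[float], kernel: List[float], V: List[List[float]]
) -> List[float]:
    """One recurrent update: h' = relu(conv(kernel, h) + V x)."""
    rec = circular_conv1d(h, kernel)
    return [
        relu(rec[i] + sum(V[i][j] * x[j] for j in range(len(x))))
        for i in range(len(h))
    ]


def run(xs: List[List[float]], n_hidden: int, kernel: List[float], V: List[List[float]]) -> List[float]:
    """Feed a sequence of input vectors through the recurrence from a zero state."""
    h = [0.0] * n_hidden
    for x in xs:
        h = wave_rnn_step(h, x, kernel, V)
    return h


# Hypothetical initialization: the kernel shifts activity one unit per step
# (out[i] = h[i - 1]) and the single input channel feeds only unit 0.
N = 8
shift_kernel = [1.0, 0.0, 0.0]
V = [[1.0] if i == 0 else [0.0] for i in range(N)]

# Two input pulses, at t = 0 and t = 2, read out after four steps.
h = run([[1.0], [0.0], [1.0], [0.0]], N, shift_kernel, V)
print(h)  # [0.0, 1.0, 0.0, 1.0, 0.0, 0.0, 0.0, 0.0]
```

Each pulse has traveled a distance equal to its age, so both remain separately readable. If the kernel is replaced with `[0.0, 1.0, 0.0]`, an identity-like recurrence with no propagation, the same two pulses pile up in unit 0 (giving 2.0 there and zeros elsewhere), and their timing can no longer be recovered from the state.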

T. Anderson Keller, Lyle Muller, Terrence Sejnowski, and Max Welling

Accepted at ICLR 2024: https://openreview.net/forum?id=p4S5Z6Sah4

Poster: https://iclr.cc/media/PosterPDFs/ICLR%202024/17796.png?t=1715213727.2184942

Code: https://github.com/akandykeller/Wave_RNNs

Tweet-print

Traveling waves are indicative of conserved quantities. In the brain, there is undeniable evidence for traveling waves of neural activity -- but what is the brain trying to conserve?

In our ICLR paper with @wellingmax, @_mullerlab, & @sejnowski, we ask: could it be memory?

🌊/9 pic.twitter.com/pZaG5FslG8— Andy Keller (@t_andy_keller) May 10, 2024