Locally Coupled Oscillatory Recurrent Networks Learn Topographic Organization

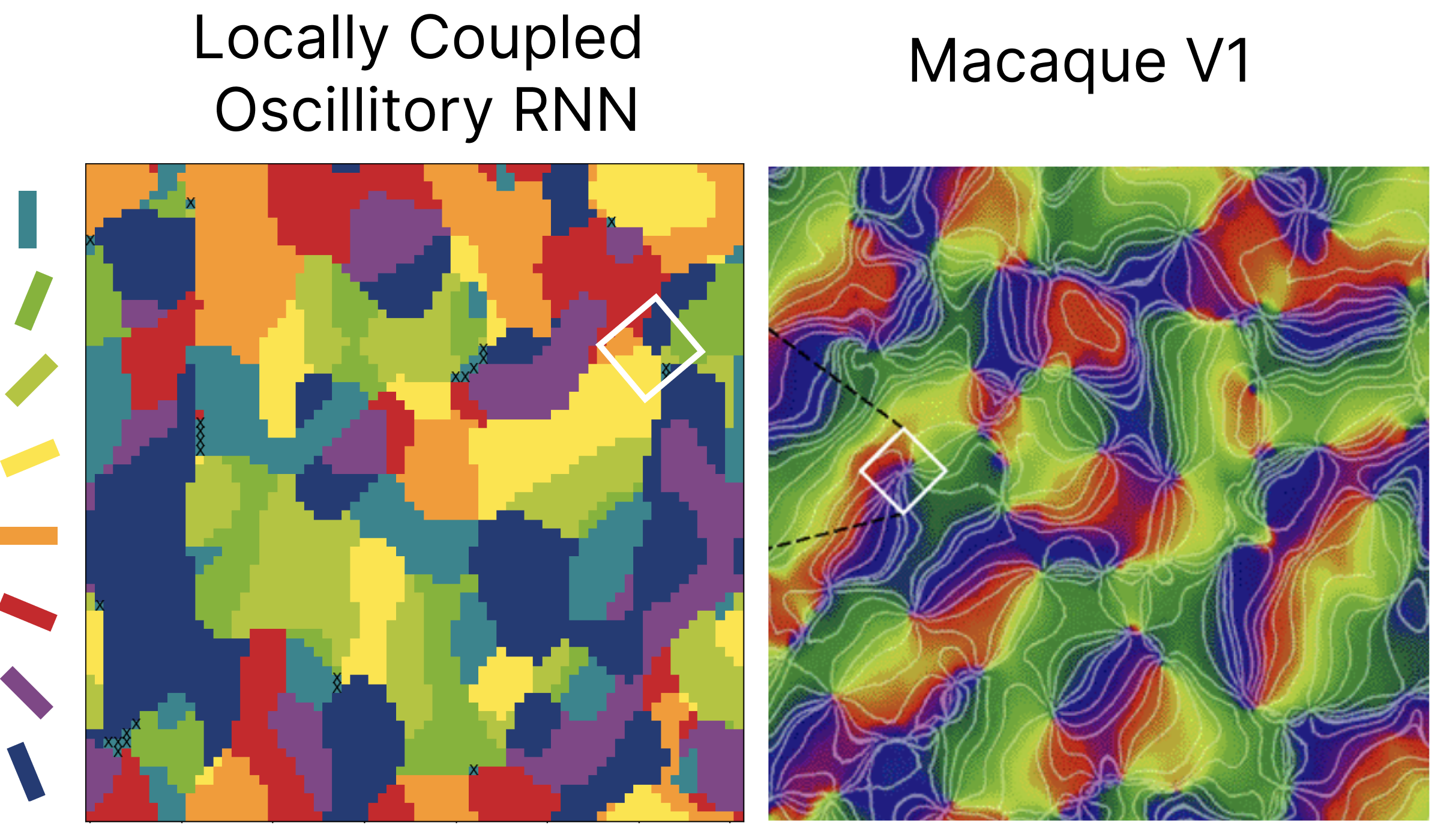

Measured orientation selectivity of neurons, color-coded according to the bars on the left. The simulated cortical sheet of our LocoRNN learns selectivity reminiscent of the orientation columns observed in the macaque primary visual cortex (source: Principles of Neural Science. E. Kandel, J. Schwartz, T. Jessell, S. Siegelbaum, & A. Hudspeth. 2013.).

Complex spatio-temporal neural population dynamics such as traveling waves are known to exist across multiple brain regions (Muller et al. 2014), and have been hypothesized to play diverse roles, from information transfer to long-term memory consolidation. To date, however, the empirical validation of these computational hypotheses has been hindered by the lack of a flexible and efficiently trainable model of such behavior. In this work, we introduce the Locally Coupled Oscillatory Recurrent Neural Network (LocoRNN) and show that it learns to leverage traveling waves, and other well-known coordinated dynamics of coupled oscillators (Kuramoto), in the service of structured sequence modeling. Unlike previous models of such dynamics, our model remains a flexible, trainable sequence model that is competitive with the state of the art on benchmarks such as the Hamiltonian Dynamics Forecasting Suite. Furthermore, when the model is trained on simple image sequences such as simulated retinal waves, the orientation selectivity of its hidden neurons becomes topographically organized, whereas such organization is absent when it is trained on unstructured noise. The resulting organization is reminiscent of the orientation columns observed in the visual cortex and is in line with prior work on activity-dependent organization in the visual system during development (Ackman et al. 2012). Due to its local connectivity, our model is both more biologically plausible and more parameter efficient than its globally coupled counterpart, the coRNN, and, because its gradients are provably bounded, it is substantially more amenable to gradient-based training than recent spiking neural network counterparts. Overall, we believe our results highlight the value of the LocoRNN as a novel tool for investigating the diversity of hypothesized roles of synchronous neural dynamics and their impact on computation.
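The abstract does not spell out the update equations, so the following is only an illustrative sketch of what "locally coupled oscillators on a sheet" could look like, not the authors' implementation. It assumes a coRNN-style damped, driven second-order oscillator for each hidden unit, with the recurrent coupling restricted to a small neighborhood on a 2D grid. The function names (`local_coupling`, `oscillator_step`), the shared 3x3 kernel, the wrap-around boundaries, the step size, and the damping constants are all hypothetical choices made for illustration.

```python
import numpy as np


def local_coupling(x, kernel):
    """Weighted sum over each unit's k x k neighborhood on a 2D sheet.

    Assumption: one kernel is shared by all units and the sheet wraps
    around at its edges (circular boundaries).
    """
    k = kernel.shape[0]
    r = k // 2
    out = np.zeros_like(x)
    for i in range(k):
        for j in range(k):
            # np.roll with shift (r - i, r - j) aligns neighbor (i - r, j - r)
            out += kernel[i, j] * np.roll(x, shift=(r - i, r - j), axis=(0, 1))
    return out


def oscillator_step(y, z, drive, w_y, w_z, dt=0.1, gamma=1.0, eps=1.0):
    """One discrete step of y'' = tanh(W*y + W_hat*y' + drive) - gamma*y - eps*y'.

    y is the oscillator state, z its velocity. This is a simple
    semi-implicit Euler update chosen for brevity; the scheme used in the
    paper may differ.
    """
    accel = (
        np.tanh(local_coupling(y, w_y) + local_coupling(z, w_z) + drive)
        - gamma * y
        - eps * z
    )
    z_new = z + dt * accel
    y_new = y + dt * z_new
    return y_new, z_new


# Hypothetical usage: a 16 x 16 sheet driven by random input for 50 steps.
rng = np.random.default_rng(0)
height, width, k = 16, 16, 3
w_y = 0.1 * rng.standard_normal((k, k))
w_z = 0.1 * rng.standard_normal((k, k))
y = np.zeros((height, width))
z = np.zeros((height, width))
for _ in range(50):
    drive = rng.standard_normal((height, width))
    y, z = oscillator_step(y, z, drive, w_y, w_z)

# Recurrent weight counts under the assumptions above.
n_units = height * width
global_params = 2 * n_units ** 2  # two dense N x N recurrent matrices
local_params = 2 * k ** 2         # two shared k x k kernels
print(global_params, local_params)
```

Under these assumptions, a 16 x 16 sheet needs 131072 recurrent weights with dense global coupling and 18 with shared 3x3 local kernels, which illustrates the kind of parameter saving that local connectivity can provide. If the local weights were not shared across positions, the count would instead scale with the number of units times the neighborhood size.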

T. Anderson Keller and Max Welling

Accepted at COSYNE 2023 (Poster)

Conference Abstract: https://akandykeller.github.io/papers/LocoRNN.pdf